bachelor thesis project.


abstract

trust in a future with ai.

helping the users to get better results from ai.

explainable ai & the black-box problem.

As artificial intelligence continues to advance, the relationship between explainability through visualization and trust becomes increasingly crucial. This thesis adds to the ongoing conversation surrounding trustworthy AI and XAI by highlighting the significance of achieving a balance between these factors, and by addressing the difficulties in effectively conveying these concepts through visualization. It is expected that a nuanced understanding of these elements will not only lead to ethically responsible AI systems but also foster greater societal acceptance and confidence in the capabilities of artificial intelligence. This research examines the criteria for trust in AI and the importance of interpretability, that is, of understanding the path an AI takes to generate a response. Based on interviews with the intended audience and with professionals in the field of AI and XAI, a tool was developed to enhance the interpretability of AI’s operational process.

Explainable AI (XAI) addresses the "black box problem" inherent in traditional AI systems, where decisions are opaque and difficult to understand. This problem arises when AI algorithms make complex decisions without providing insight into the reasoning behind them, leading to distrust and uncertainty. XAI aims to open this black box by providing interpretability and understanding of AI decision-making processes. By doing so, it enhances transparency, builds trust, and enables users to comprehend why AI systems produce specific outcomes, ultimately fostering greater acceptance and confidence in AI technologies (Von Eschenbach, 2021; Reintjes, 2017).

development

Keywords: Explainable Artificial Intelligence, Generative Artificial Intelligence, Interpretability, Black-Box Problem, Visualizing Explainability, Midjourney

the problem

results

key pain points.

These are the main points gathered from literature and interviews about users' behavior with AI tools and their challenges with them.

» Users struggle to keep up with the evolving vocabulary and fast pace of AI development.

» Users desire more support and guidance from AI to achieve desired results.

» A lack of knowledge about AI among users is identified.

» Roughly half of those surveyed (49%) have a limited understanding of AI, which leads to a lack of trust in it (Gillespie et al., 2023).

» Experts and researchers emphasize the importance of developing fair, unbiased systems that cater to a diverse range of users.

» There is a need for transparent processes to ensure data used for training does not cause issues.

» Experts report a lack of communication and collaboration between developers and end-users, as well as a lack of understanding of end-users’ pain points when using AI.

» XAI methods are difficult to apply, and a lot of testing is required to find the fitting method.

access to knowledge

fairness & biases

human-centered design

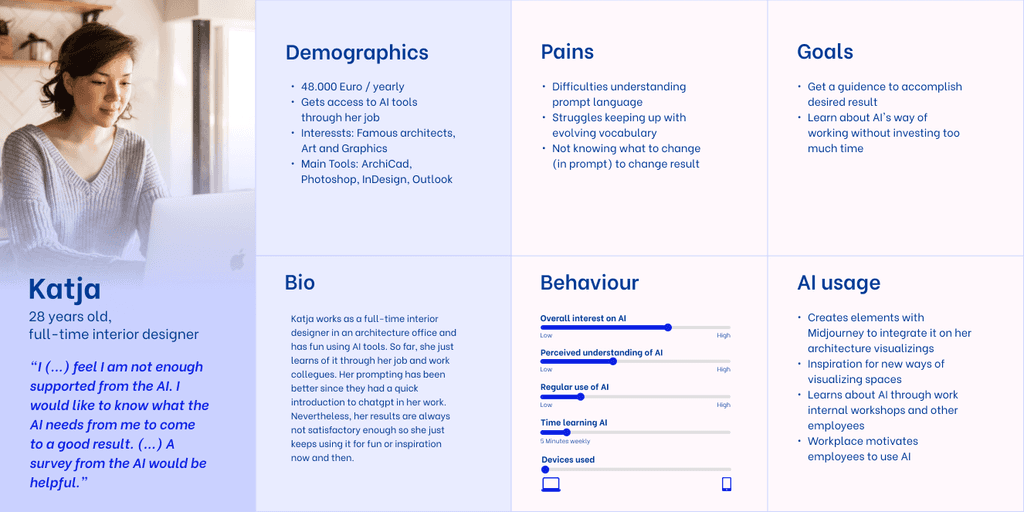

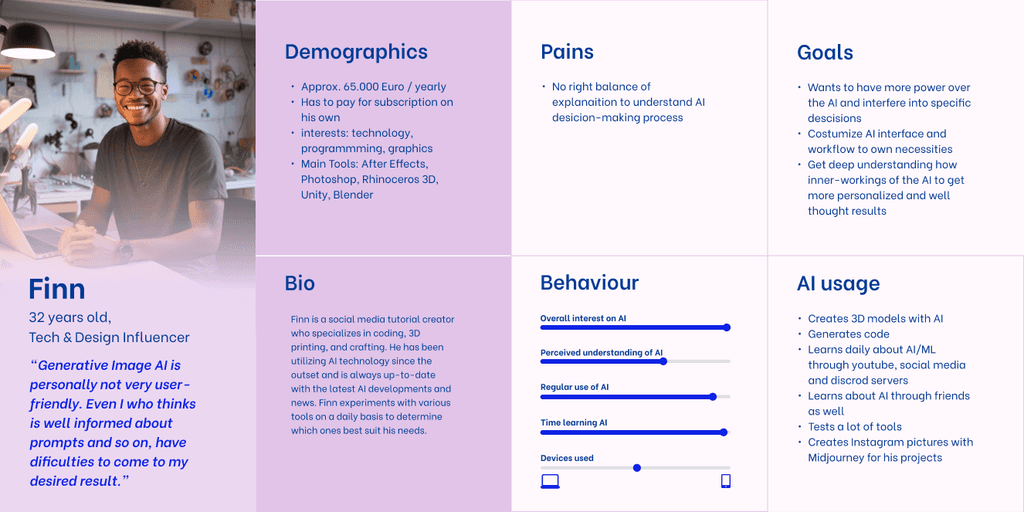

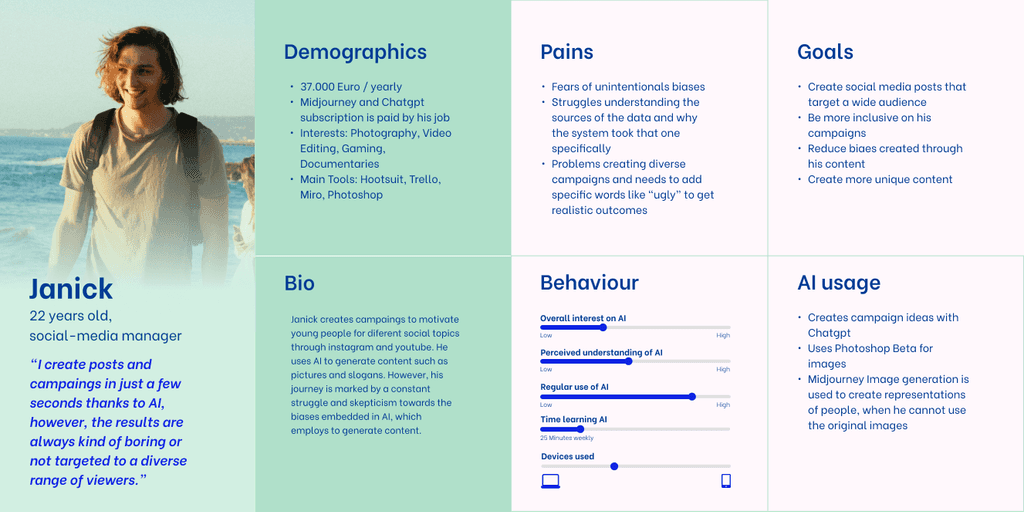

persona

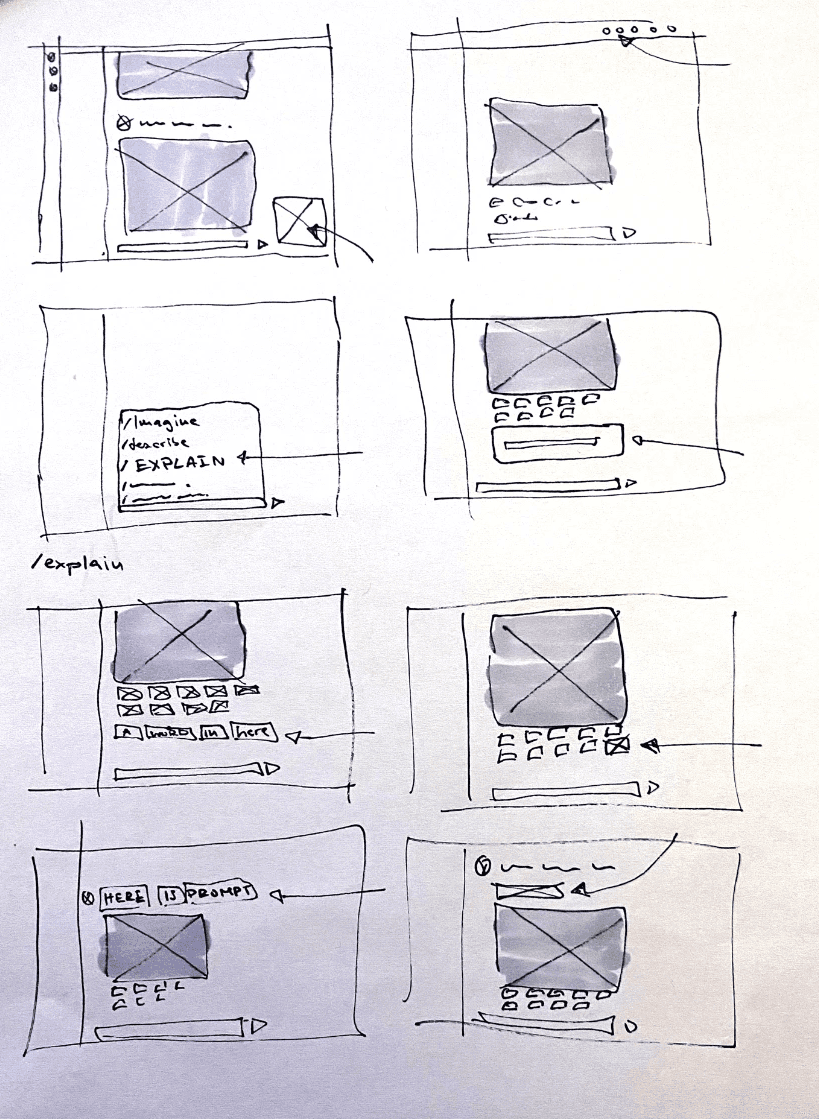

paper prototypes, position of explanation feature

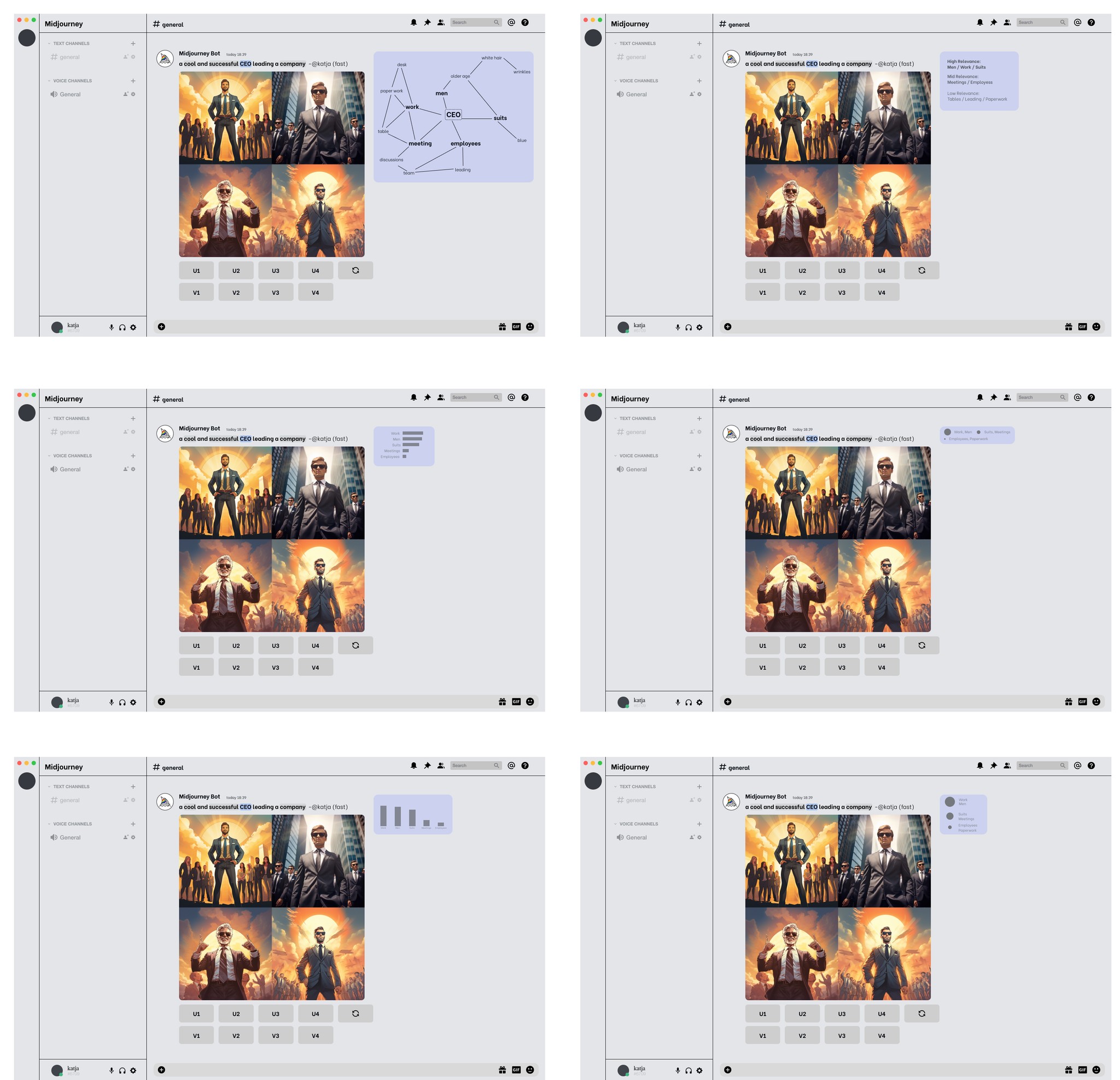

The prompt "a cool and successful CEO leading a company." was used for user testing.

The research paper "From Attribution Maps to Human-Understandable Explanations through Concept Relevance Propagation" introduces Concept Relevance Propagation (CRP) and Relevance Maximization (RelMax) to enhance machine learning model interpretability. CRP identifies key features in data, such as "fur" or "eyes" in images, answering questions like "What made the computer think it's a dog?" RelMax finds influential examples in training data. These methods are user-friendly and applicable across various software without extra prerequisites, aiding in error identification, bias mitigation, and transparency enhancement in AI systems. They contribute significantly to ensuring equitable deployment of artificial intelligence technologies.
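The idea of asking "which concept mattered, and which inputs fed that concept?" can be illustrated with a deliberately simplified sketch. It is not the implementation from the paper, which targets deep nonlinear networks. It assumes a toy two-layer linear model in which each hidden unit stands for one concept. All weights, the concept names, and the function names are hypothetical and chosen only for illustration.

```python
# Toy two-layer linear model. Every number below is made up for illustration.
# Each hidden unit stands in for one learned "concept".
CONCEPTS = ["fur", "eyes"]

# W[j][i]: weight from input feature i to concept unit j (hypothetical values).
W = [[0.5, 1.0, 0.0],
     [0.0, 0.5, 2.0]]
# V[j]: weight from concept unit j to the "dog" score (hypothetical values).
V = [2.0, 1.0]


def forward(x):
    """Return the concept activations and the output score for input x."""
    hidden = [sum(w * xi for w, xi in zip(row, x)) for row in W]
    score = sum(v * h for v, h in zip(V, hidden))
    return hidden, score


def concept_relevance(x):
    """Relevance of each concept: its share of the output score."""
    hidden, _ = forward(x)
    return {name: v * h for name, v, h in zip(CONCEPTS, V, hidden)}


def conditional_input_relevance(x, concept):
    """Input relevance when only the path through one concept is followed."""
    j = CONCEPTS.index(concept)
    return [V[j] * W[j][i] * x[i] for i in range(len(x))]


x = [1.0, 2.0, 0.5]
print(concept_relevance(x))
print(conditional_input_relevance(x, "fur"))
```

In this toy model the concept relevances add up to the output score, and the input relevances conditioned on one concept add up to that concept's relevance. This conservation property is what lets an explanation answer both "which concept?" and "where in the input?".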

understanding ai decisions: CRP and RelMax explained.

The result is a feature in Midjourney that explains the relationship of each word in the prompt to other concepts by highlighting their relevance to one another. For instance, when given the prompt 'cool,' there is a high probability that the generated image will feature sunglasses. By providing this explanation, users gain insight into how the AI generates its results, enabling them to refine their prompts and mitigate biases.
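The kind of data such an explanation feature might present can be sketched as follows. This is only an illustration and says nothing about how Midjourney works internally: the `ASSOCIATIONS` table, its scores, the `threshold` parameter, and the function name `explain_prompt` are all assumptions. Only the link between "cool" and sunglasses comes from the example above.

```python
# Hypothetical association scores between prompt words and visual concepts.
# Real scores would have to come from the model; these values are invented.
ASSOCIATIONS = {
    "cool": {"sunglasses": 0.8, "leather jacket": 0.4},
    "ceo": {"suit": 0.7, "office": 0.5},
}


def explain_prompt(prompt, threshold=0.5):
    """Map each known prompt word to its strongly associated concepts."""
    words = [w.strip(".,\"'").lower() for w in prompt.split()]
    explanation = {}
    for word in words:
        concepts = ASSOCIATIONS.get(word)
        if not concepts:
            continue  # no association data for this word
        # Keep concepts at or above the threshold, strongest first.
        strong = sorted(
            ((name, score) for name, score in concepts.items() if score >= threshold),
            key=lambda pair: pair[1],
            reverse=True,
        )
        if strong:
            explanation[word] = strong
    return explanation


for word, concepts in explain_prompt("a cool and successful CEO leading a company.").items():
    print(word, "->", concepts)
```

A user who sees that "cool" is strongly tied to sunglasses can then decide to rephrase the prompt or to exclude that concept explicitly.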

For the generative AI tool 'Midjourney', a new feature has been developed to explain the AI's operational logic to users. It aims to improve outcomes by giving users a deeper understanding of the AI and its way of "thinking".

wireframes.

result.